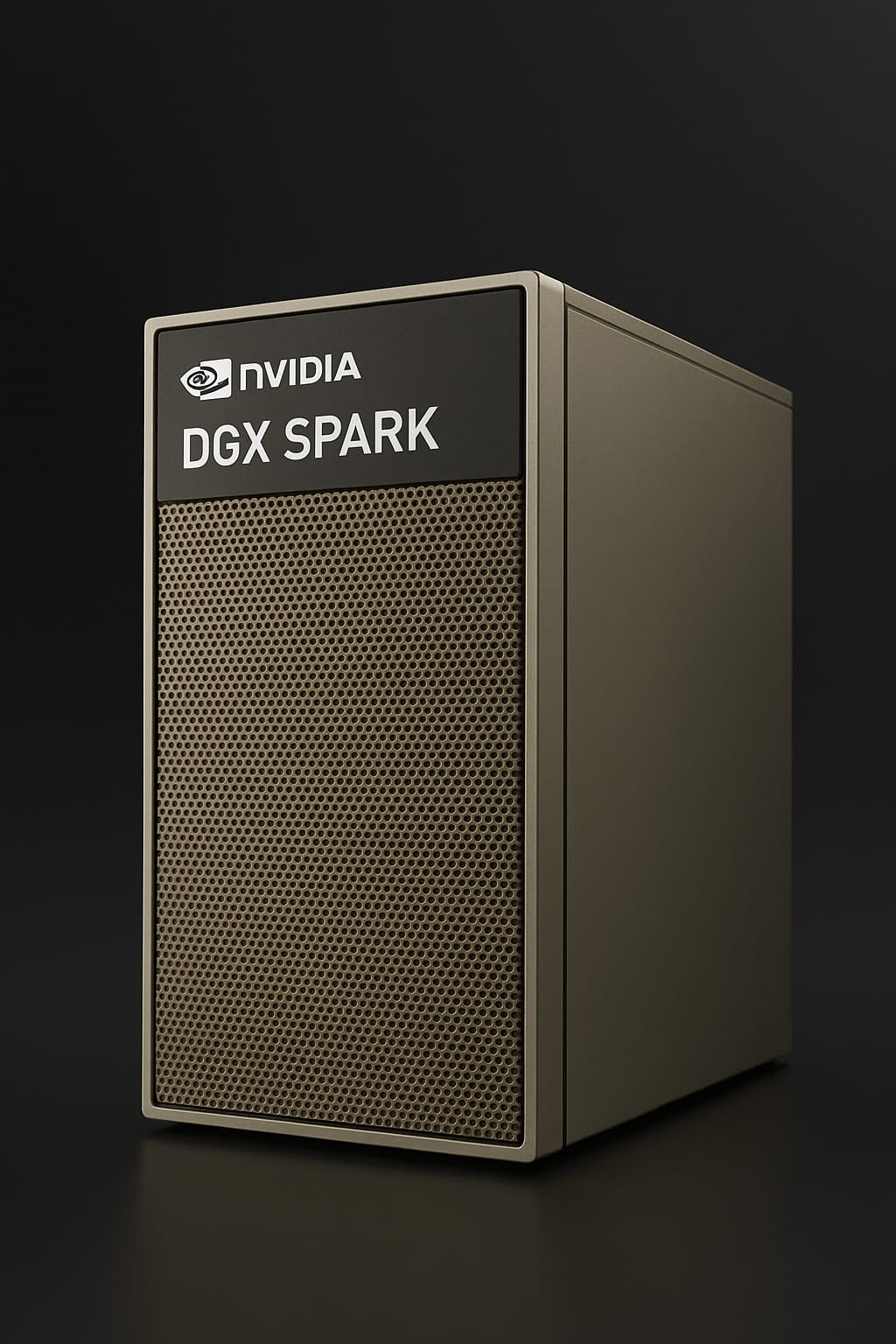

NVIDIA DGX Spark brings data-center-class AI capabilities to the desktop for $2,999-$3,999, letting developers run inference on models of up to 200 billion parameters and fine-tune models of up to 70 billion parameters locally. Built around the GB10 Grace Blackwell Superchip with 128GB of unified memory, this compact system moves AI development away from cloud-dependent workflows and toward secure, local experimentation.

Announced in March 2025 and slated for delivery in Summer 2025, DGX Spark addresses a critical gap in the AI development ecosystem. While cloud computing has democratized access to AI resources, concerns about data privacy, recurring costs, and latency have created demand for powerful local alternatives. NVIDIA’s answer delivers up to 1 petaFLOP of FP4 AI performance in a form factor smaller than most gaming consoles, widening the circle of people who can take part in advanced AI development.

For developers, researchers, and students previously limited by cloud computing costs or data-sensitivity requirements, DGX Spark removes much of that constraint. Because it uses the same Grace Blackwell architecture found in NVIDIA’s data center offerings, code developed on the desktop can move to production environments with little or no modification.

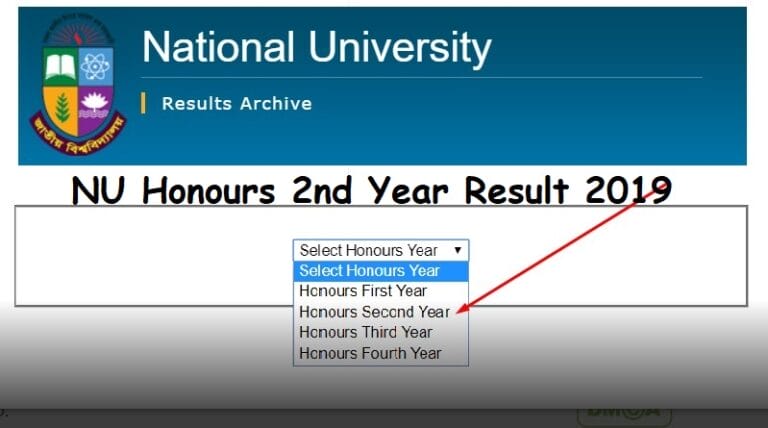

Understanding the GB10 Grace Blackwell architecture powering DGX Spark

At the heart of DGX Spark lies the NVIDIA GB10 Grace Blackwell Superchip, a system-on-chip that pairs a 20-core Arm CPU with a Blackwell-generation GPU. The two are linked by NVIDIA’s NVLink-C2C chip-to-chip interconnect, which delivers 5x the bandwidth of PCIe Gen 5 and removes the traditional CPU-GPU bus bottleneck. The result is a system that can handle AI workloads previously reserved for rack-mounted servers.
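
To make the "5x the bandwidth of PCIe Gen 5" figure concrete, the sketch below estimates how long an idealized copy of a model-sized payload would take over a PCIe 5.0 x16 link versus a link five times faster. The ~63 GB/s per-direction rate is the theoretical PCIe 5.0 x16 figure (32 GT/s per lane, 128b/130b encoding); the 35 GB payload and the literal 5x multiplier are illustrative assumptions, not measured GB10 numbers.

```python
# Theoretical PCIe 5.0 x16 rate per direction in GB/s:
# 32 GT/s per lane * 16 lanes * 128b/130b encoding efficiency / 8 bits per byte
PCIE5_X16_GB_S = 32 * 16 * (128 / 130) / 8  # ~63.0 GB/s


def transfer_seconds(payload_gb: float, bandwidth_gb_s: float) -> float:
    """Idealized time to move payload_gb gigabytes at bandwidth_gb_s GB/s."""
    return payload_gb / bandwidth_gb_s


payload_gb = 35.0  # hypothetical payload: ~70B parameters at 4 bits each
pcie_s = transfer_seconds(payload_gb, PCIE5_X16_GB_S)
c2c_s = transfer_seconds(payload_gb, 5 * PCIE5_X16_GB_S)  # applies the "5x" claim literally
print(f"PCIe Gen 5 x16: {pcie_s:.2f} s; 5x faster link: {c2c_s:.2f} s")
```

Real links fall short of theoretical rates, so both numbers are best-case; the ratio between them is what the 5x claim describes.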

The CPU combines 10 high-performance Cortex-X925 cores with 10 power-efficient Cortex-A725 cores, providing the computational flexibility needed for diverse AI workflows. The GPU incorporates fifth-generation Tensor Cores with FP4 precision support, which is how the system reaches its 1 petaFLOP peak (an FP4 figure) while drawing a modest 170 watts.
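
FP4 stores each value in 4 bits, so two weights fit in one byte. The sketch below rounds floats to the E2M1 value grid (±0, 0.5, 1, 1.5, 2, 3, 4, 6) used by common FP4 formats and packs pairs of 4-bit codes into bytes. It deliberately omits the per-block scale factors that real FP4 schemes such as NVFP4 or MXFP4 apply before rounding, so it illustrates the storage idea only and is not NVIDIA's quantization recipe.

```python
E2M1_MAGNITUDES = [0.0, 0.5, 1.0, 1.5, 2.0, 3.0, 4.0, 6.0]  # list index == 3-bit code


def encode_e2m1(x: float) -> int:
    """Round x to the nearest E2M1 value and return its 4-bit code (sign in bit 3)."""
    sign = 0b1000 if x < 0 else 0
    mag = min(abs(x), 6.0)  # saturate at the largest representable magnitude
    # nearest grid point; on a tie min() keeps the first (smaller) magnitude
    code = min(range(8), key=lambda i: abs(E2M1_MAGNITUDES[i] - mag))
    return sign | code


def decode_e2m1(code: int) -> float:
    value = E2M1_MAGNITUDES[code & 0b0111]
    return -value if code & 0b1000 else value


def pack_pairs(values: list[float]) -> bytes:
    """Pack two 4-bit codes per byte (low nibble first); pads an odd count with 0."""
    codes = [encode_e2m1(v) for v in values]
    if len(codes) % 2:
        codes.append(0)
    return bytes(codes[i] | (codes[i + 1] << 4) for i in range(0, len(codes), 2))


def unpack_pairs(data: bytes, count: int) -> list[float]:
    out = []
    for byte in data:
        out.append(decode_e2m1(byte & 0x0F))
        out.append(decode_e2m1(byte >> 4))
    return out[:count]  # drop the padding nibble, if any


weights = [0.3, -1.2, 2.6, 5.1, -7.0]
packed = pack_pairs(weights)
print(len(packed), unpack_pairs(packed, len(weights)))
```

Production FP4 formats rescale each small block of weights before rounding, which is what keeps accuracy acceptable on such a coarse grid.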

Perhaps most importantly, the 128GB of LPDDR5X unified memory lets DGX Spark load and run large language models that would typically require multiple conventional GPUs. With 273 GB/s of memory bandwidth, developers can work with models such as Llama 2 70B, DeepSeek-V2, and Mistral locally, though generation speed is modest: roughly 3 tokens per second for a quantized 70B-parameter model.
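
Two back-of-the-envelope numbers explain these figures. The weight footprint (parameters times bytes per parameter) decides whether a model fits in 128GB, and during single-stream generation every weight is read roughly once per token, so memory bandwidth divided by weight size gives an upper bound on tokens per second. The sketch below ignores KV cache, activations, and runtime overhead; it computes a ceiling, not a prediction.

```python
def weight_gb(params_billion: float, bits_per_weight: float) -> float:
    """Approximate weight footprint in GB: 1e9 params * bits / 8 bits-per-byte / 1e9."""
    return params_billion * bits_per_weight / 8


def decode_ceiling_tok_s(params_billion: float, bits_per_weight: float,
                         bandwidth_gb_s: float) -> float:
    """Upper bound on batch-1 decoding speed if every token streams all weights once."""
    return bandwidth_gb_s / weight_gb(params_billion, bits_per_weight)


MEMORY_GB, BANDWIDTH_GB_S = 128, 273  # DGX Spark figures quoted above
for bits in (16, 8, 4):
    size = weight_gb(70, bits)
    verdict = "fits" if size < MEMORY_GB else "does not fit"
    ceiling = decode_ceiling_tok_s(70, bits, BANDWIDTH_GB_S)
    print(f"70B @ {bits}-bit: {size:.0f} GB ({verdict}), ceiling ~{ceiling:.1f} tok/s")
```

For a 4-bit 70B model the ceiling works out to about 7.8 tokens per second, so the roughly 3 tokens per second quoted above is plausible once KV-cache reads and less-than-peak bandwidth are taken into account.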

The architecture’s efficiency extends beyond raw performance metrics. By implementing a unified memory model similar to Apple Silicon but optimized for AI workloads, DGX Spark eliminates the need for constant data transfers between CPU and GPU memory spaces. This design choice particularly benefits iterative development workflows where models are frequently loaded, modified, and tested.

Real-world applications transforming industries with local AI

Healthcare organizations are among the early adopters of DGX Spark, using it to develop diagnostic AI models while maintaining strict HIPAA compliance. A prominent medical research facility recently deployed multiple units to create a secure environment for analyzing patient imaging data, developing custom models that can identify rare conditions without exposing sensitive information to cloud services. The ability to fine-tune models on proprietary datasets locally has accelerated their research timeline from months to weeks.

Financial services firms leverage DGX Spark for algorithmic trading development and risk analysis. One major investment bank reported reducing their AI development costs by 60% by moving initial model development from cloud services to DGX Spark clusters. The system’s ability to run complex financial models locally enables rapid iteration on trading strategies while maintaining the security required for proprietary algorithms.

In robotics and autonomous systems, DGX Spark serves as the development platform for next-generation edge AI applications. Robotics teams use the platform with NVIDIA’s Isaac framework to develop perception and control algorithms that will eventually run on embedded systems. The consistent architecture between development and deployment platforms significantly reduces the complexity of moving from prototype to production.

Educational institutions have embraced DGX Spark as a teaching tool for advanced AI courses. Universities report that giving students hands-on access to hardware capable of running state-of-the-art models has transformed their AI curriculum. Students can now experiment with large language models, fine-tune them for specific tasks, and understand the computational requirements of modern AI—experiences previously limited to well-funded research labs.

Technical deep dive into specifications and capabilities

DGX Spark’s compact 150mm x 150mm x 50.5mm form factor belies its sophisticated internal architecture. The system integrates multiple cutting-edge technologies to deliver workstation-class performance in a desktop package weighing just 1.2 kilograms. Understanding these specifications helps developers maximize the platform’s capabilities for their specific use cases.

The connectivity options reflect modern development needs with four USB4 Type-C ports supporting up to 40 Gb/s data transfer, essential for connecting high-speed storage arrays or external GPUs. The integrated ConnectX-7 Smart NIC enables 200GbE networking when clustering multiple units, allowing two DGX Spark systems to work together on models up to 405 billion parameters. This clustering capability transforms the economics of large model development for smaller organizations.
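
The 200B (one unit) and 405B (two units) figures line up with simple capacity arithmetic at 4 bits per weight. The sketch below checks whether a model's weights fit in the pooled memory of one or more units after reserving headroom. The 10% headroom for the OS, KV cache, and runtime is an assumption chosen for illustration, and the check ignores how a real runtime splits layers across the network link.

```python
UNIT_MEMORY_GB = 128


def fits(params_billion: float, bits_per_weight: float, units: int,
         headroom: float = 0.10) -> bool:
    """True if the weights fit in the units' combined memory minus headroom."""
    needed_gb = params_billion * bits_per_weight / 8
    usable_gb = units * UNIT_MEMORY_GB * (1 - headroom)
    return needed_gb <= usable_gb


for params, units in [(200, 1), (405, 1), (405, 2)]:
    print(f"{params}B on {units} unit(s) at 4-bit: {fits(params, 4, units)}")
```

At 8 bits per weight even two units could not hold a 405B model (about 405 GB of weights), which is why aggressive quantization is central to the two-unit figure.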

Storage configurations include either 1TB or 4TB NVMe M.2 SSDs with hardware encryption, ensuring data security at rest. The system’s thermal design keeps noise below 35 dB, making it suitable for office environments, unlike traditional server hardware. Power is delivered over USB Type-C, which simplifies deployment, while the 170W consumption keeps operating costs manageable.

Software support encompasses the entire NVIDIA AI ecosystem, with DGX OS (based on Ubuntu 22.04) providing a familiar Linux environment optimized for AI workloads. Pre-installed frameworks include PyTorch, TensorFlow, and JAX with native ARM optimizations. The inclusion of NVIDIA RAPIDS accelerates data science workflows, while access to the NGC catalog provides thousands of pre-trained models and containerized applications.

Comparing DGX Spark to alternative AI development platforms

When evaluating DGX Spark against alternatives, the comparison extends beyond raw specifications to ecosystem considerations. The Apple Mac Studio M4 Max offers higher memory bandwidth at 546 GB/s versus DGX Spark’s 273 GB/s, potentially delivering faster performance for certain workloads. However, Mac Studio lacks native CUDA support, limiting access to the vast ecosystem of NVIDIA-optimized AI tools and frameworks that many developers rely upon.

AMD’s Ryzen AI MAX+ 395 platform, particularly in systems like the Framework Desktop, presents a compelling value proposition at around $2,329 for 128GB configurations. The x86 architecture ensures broader software compatibility, including Windows support. Yet AMD’s ROCm ecosystem remains significantly less mature than CUDA, with many popular AI frameworks offering limited or experimental support for AMD GPUs.

For those requiring maximum performance, NVIDIA’s own RTX 6000 Ada and the newer RTX PRO 6000 Blackwell deliver superior raw compute power. However, these professional GPUs carry 48GB and 96GB of memory respectively, still short of what the largest models require. DGX Spark’s 128GB of unified memory accommodates models that would otherwise have to be split across multiple professional GPUs, fundamentally changing the cost equation for model development.

Cloud alternatives like AWS EC2 P4d instances or Google Cloud A100 nodes offer virtually unlimited scalability but incur ongoing costs that can quickly exceed DGX Spark’s purchase price. A typical large model fine-tuning project running for several weeks on cloud infrastructure can cost $10,000-$20,000, making DGX Spark’s one-time investment attractive for organizations with consistent AI development needs.

Pricing strategies and total cost of ownership analysis

Understanding DGX Spark’s pricing requires looking beyond the initial $2,999-$3,999 purchase price to consider total cost of ownership. The base configuration with 1TB storage at $2,999 targets budget-conscious developers and educational institutions, while the 4TB Founders Edition at $3,999 caters to professionals requiring additional storage for large datasets and model checkpoints.
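
To put the two storage tiers in perspective, the sketch below counts how many whole checkpoints of a given model fit on each drive. It assumes 16-bit weights only (no optimizer state), treats 1TB and 4TB as 1,000 and 4,000 GB, and keeps 10% of the drive free; the 8B model size is a hypothetical example from the 7-13B range. Checkpoints that include optimizer state are several times larger.

```python
def checkpoints_that_fit(drive_tb: float, params_billion: float,
                         bytes_per_param: float = 2.0, reserve: float = 0.10) -> int:
    """Whole checkpoints of a model that fit on a drive, keeping `reserve` free."""
    usable_gb = drive_tb * 1000 * (1 - reserve)       # decimal TB -> GB, minus headroom
    checkpoint_gb = params_billion * bytes_per_param  # 1e9 params * bytes = GB
    return int(usable_gb // checkpoint_gb)


for drive_tb in (1, 4):
    small = checkpoints_that_fit(drive_tb, 8)
    large = checkpoints_that_fit(drive_tb, 70)
    print(f"{drive_tb}TB drive: {small} x 8B or {large} x 70B checkpoints (16-bit weights)")
```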

Operating costs remain low: 170W of power consumption translates to approximately $150-$200 in annual electricity under typical development workloads. This compares favorably with cloud computing, where similar capabilities might cost $500-$1,000 monthly. For organizations running AI workloads consistently, DGX Spark typically pays for itself within 4-6 months.
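
The operating-cost and payback figures follow from simple arithmetic. The sketch below uses the 170W draw quoted above together with an 80% average utilization and an electricity price of $0.15/kWh; the utilization and price are illustrative assumptions, and the monthly cloud figure stands for whatever spend the hardware would replace.

```python
HOURS_PER_YEAR = 24 * 365


def annual_power_cost(watts: float, utilization: float, usd_per_kwh: float) -> float:
    """Yearly electricity cost for a device drawing `watts` for `utilization` of the time."""
    kwh = watts / 1000 * HOURS_PER_YEAR * utilization
    return kwh * usd_per_kwh


def payback_months(purchase_usd: float, monthly_cloud_usd: float,
                   annual_power_usd: float) -> float:
    """Months until avoided cloud spend, net of electricity, covers the purchase price."""
    monthly_saving = monthly_cloud_usd - annual_power_usd / 12
    if monthly_saving <= 0:
        return float("inf")  # the hardware never pays for itself
    return purchase_usd / monthly_saving


power = annual_power_cost(170, utilization=0.8, usd_per_kwh=0.15)
print(f"electricity: ~${power:.0f}/year")
for price, cloud in [(2999, 500), (3999, 1000)]:
    print(f"${price} replacing ${cloud}/month: {payback_months(price, cloud, power):.1f} months")
```

With these inputs the electricity bill lands near $180 per year, and the two scenarios pay back in about 6.2 months ($2,999 replacing $500/month) and 4.1 months ($3,999 replacing $1,000/month).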

Partner pricing varies by region and configuration, with ASUS’s Ascent GX10 matching the $2,999 entry point for similar specifications. European pricing typically runs higher, at €3,689, due to import duties and VAT. Educational discounts through NVIDIA’s academic program can reduce costs by 10-20%, making the platform more accessible to universities and research institutions.

Hidden cost savings emerge from reduced cloud egress fees, eliminated data transfer times, and improved developer productivity through faster iteration cycles. Organizations report 3-5x productivity improvements when developers can experiment locally without worrying about cloud costs or waiting for resource allocation. The ability to maintain proprietary models and datasets on-premises also eliminates potential compliance and security audit costs.

Implementation guide for maximizing DGX Spark performance

Successful DGX Spark deployment begins with understanding your specific use case requirements. For organizations focused on model inference, the single-unit configuration handles models up to 200 billion parameters effectively. Those pursuing model fine-tuning should consider the practical limit of 70 billion parameters for reasonable training times, though smaller models in the 7-13 billion parameter range offer the best balance of capability and performance.
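
Why does inference scale to 200B parameters while fine-tuning tops out near 70B? A widely used rule of thumb for full fine-tuning with mixed precision and the Adam optimizer is about 16 bytes per parameter (16-bit weights and gradients, plus FP32 master weights and two FP32 optimizer moments), before activations. Parameter-efficient methods such as QLoRA instead freeze a 4-bit copy of the base model and train small adapters. The sketch below compares the two; the 0.5% adapter size is an assumption for illustration, and activation memory is ignored.

```python
def full_finetune_gb(params_billion: float) -> float:
    # 2 (fp16 weights) + 2 (fp16 grads) + 4 (fp32 master) + 4 + 4 (Adam moments) = 16 bytes
    return params_billion * 16


def qlora_gb(params_billion: float, adapter_fraction: float = 0.005) -> float:
    base = params_billion * 0.5                        # frozen 4-bit base weights
    adapters = params_billion * adapter_fraction * 16  # trainable adapters with Adam state
    return base + adapters


for size in (8, 70):
    print(f"{size}B: full fine-tune ~{full_finetune_gb(size):.0f} GB, "
          f"QLoRA-style ~{qlora_gb(size):.1f} GB (weights and optimizer only)")
```

Under this rule even an 8B model needs about 128 GB for full fine-tuning, the machine's entire memory before activations, which is why parameter-efficient methods are the practical route to fine-tuning 70B models on a single unit.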

Initial setup involves minimal configuration thanks to the pre-installed DGX OS and AI software stack. Developers familiar with Linux environments can be productive within hours of unboxing. The system automatically detects and configures the GB10 superchip, with NVIDIA drivers and CUDA toolkit pre-installed and optimized. Network configuration for clustering requires only connecting the included QSFP cable between units and running the DGX clustering setup utility.

Optimizing workload performance requires understanding the unified memory architecture. Unlike traditional GPU systems, where data must be explicitly transferred between CPU and GPU memory, DGX Spark’s unified design lets both processors access the same memory pool. This particularly benefits preprocessing pipelines and mixed-precision training, where frequent data movement traditionally creates bottlenecks. Developers should design applications around this shared pool rather than assuming a separate, smaller GPU memory.

For production deployment, DGX Spark integrates with NVIDIA’s broader ecosystem. Models developed locally can be containerized using the NVIDIA Container Toolkit and deployed to DGX Cloud or on-premises DGX systems with little or no modification. This consistency from development to production is one of the platform’s most compelling advantages, reducing the traditional friction of moving from prototype to deployment.

Future-proofing your AI infrastructure investment

NVIDIA’s public roadmap reveals DGX Spark as the entry point to a comprehensive AI infrastructure strategy. The 2026 introduction of “Rubin” architecture GPUs with HBM4 memory promises significant performance improvements while maintaining software compatibility. Organizations investing in DGX Spark today can expect their code and workflows to remain relevant through multiple hardware generations.

Software evolution continues at a rapid pace with monthly updates to the DGX OS and AI frameworks. Recent additions include optimized support for emerging model architectures like Mixture of Experts (MoE) and enhanced quantization techniques that allow even larger models to run efficiently. The platform’s ARM architecture positions it well for the industry’s gradual shift away from x86, particularly in AI-focused workloads where power efficiency becomes increasingly critical.

The clustering capability hints at future scalability options currently under development. While officially limited to two-unit configurations, NVIDIA’s engineering teams are exploring mesh networking topologies that could enable larger clusters for departmental deployments. This would position DGX Spark as a building block for modular AI infrastructure that scales with organizational needs.

Making the investment decision for your organization

DGX Spark represents a strategic investment for organizations serious about AI development. The platform excels for teams requiring secure, local development environments, those working with proprietary datasets, and educational institutions teaching advanced AI concepts. The ability to develop with the same architecture used in production environments significantly reduces deployment friction and accelerates time-to-market for AI applications.

Organizations should carefully evaluate their workload requirements against DGX Spark’s capabilities. While the 273 GB/s memory bandwidth may limit performance for bandwidth-bound tasks such as token generation on large models, the 128GB capacity enables work with models that would otherwise require expensive multi-GPU systems. The platform’s sweet spot lies in model development, fine-tuning, and inference for models in the 7-70 billion parameter range.

The decision ultimately comes down to development patterns and security requirements. Teams spending more than $1,000 monthly on cloud AI compute will likely see rapid ROI from DGX Spark. Those with strict data residency requirements or working with sensitive information will find the platform’s local processing capabilities invaluable. As AI becomes increasingly central to business operations, having dedicated development hardware provides both flexibility and control that cloud-only strategies cannot match.

With its Summer 2025 availability approaching and reservations currently open, interested organizations should evaluate their AI development roadmaps against DGX Spark’s capabilities. The convergence of accessible pricing, enterprise-grade architecture, and comprehensive software support makes this an inflection point in AI development accessibility. For many organizations, DGX Spark will serve as the bridge between experimental AI projects and production-ready applications, democratizing access to advanced AI development while maintaining the security and control that modern businesses require.